Boosting Network Trust: Unveil AI TRiSM with Network Copilot™

Introduction to AI TRiSM (Trust, Risk & Security Management)

As AI reshapes the world, its transformative power drives revolutionary innovations across every sector. The benefits are immense, offering businesses a competitive edge and optimizing operations. However, to harness this potential responsibly, we must prioritize ethical and trustworthy AI development and usage.

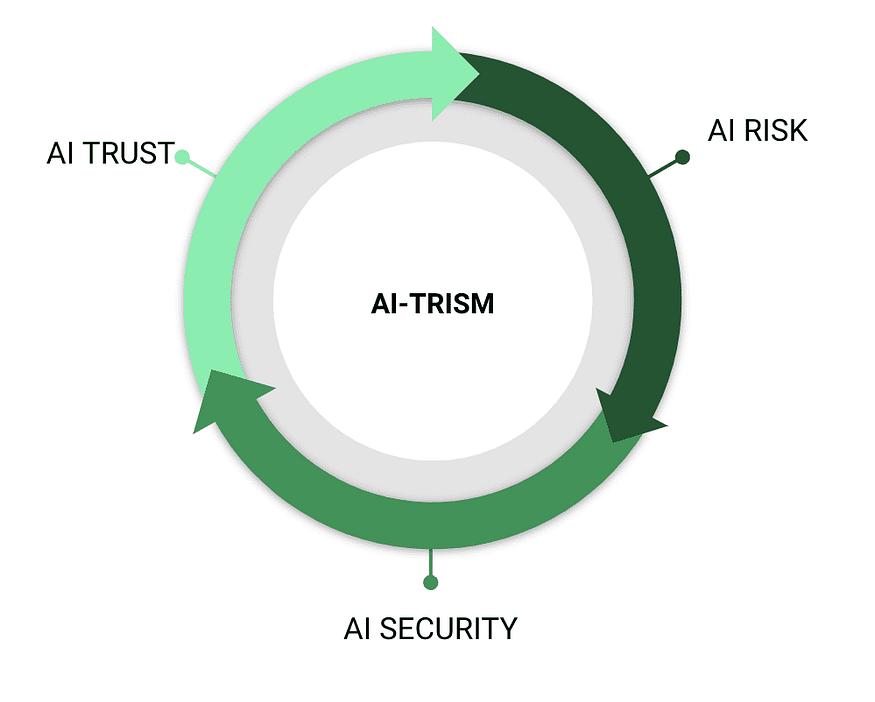

This is where AI TRiSM, a framework conceptualized by Gartner, emerges as a cornerstone for responsible AI development. It emphasizes three crucial concepts in AI systems: Trust, Risk, and Security Management (TRiSM). By focusing on these key principles, AI TRiSM aims to build user confidence and ensure ethical and responsible use of technology that impacts everyone.

The Framework of AI TRiSM

By embracing AI TRiSM, organizations can navigate the exciting world of AI with confidence, maximizing its benefits while ensuring responsible and ethical use.

- 1. AI Trust: This cornerstone emphasizes transparency and explainability. By ensuring AI models provide clear explanations for their decisions, users gain confidence and trust in the technology.

- 2. AI Risk: This pillar focuses on mitigating potential risks associated with AI. By implementing strict governance practices, organizations can manage risks during development, deployment, and operation stages, ensuring compliance and integrity.

- 3. AI Security Management: This crucial aspect focuses on safeguarding AI models from unauthorized access, manipulation, and misuse. By integrating security measures throughout the entire AI lifecycle, organizations can protect their models, maintain data privacy, and foster operational stability.

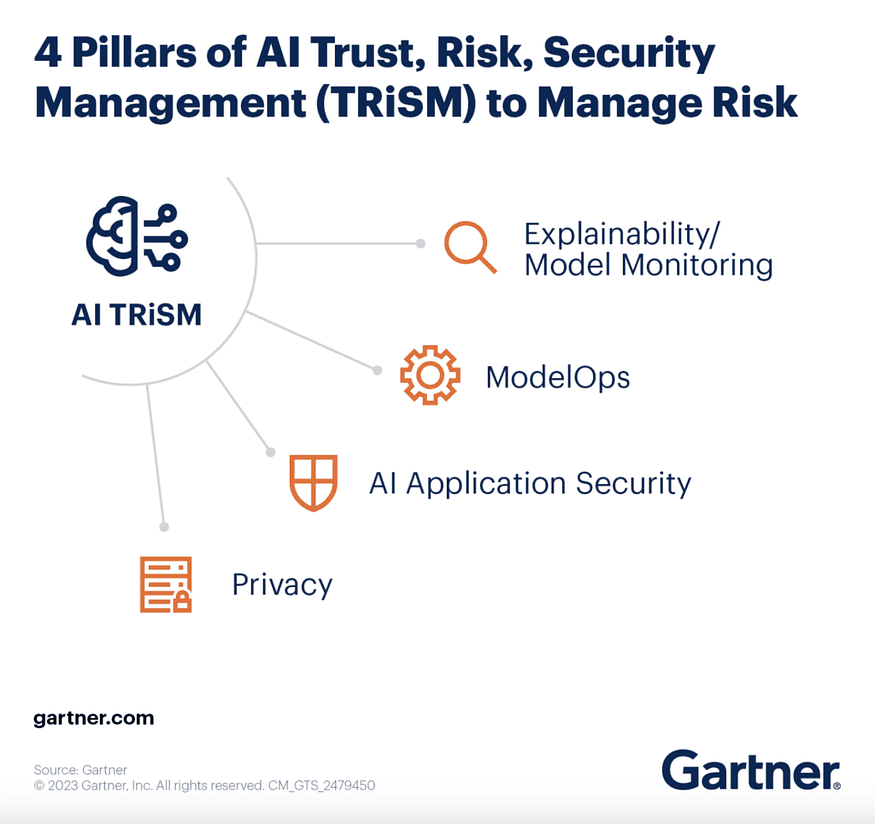

4 Pillars of AI TRiSM Framework

This framework relies on four key pillars to ensure responsible and ethical implementation of AI:

- 1. Explainability and Model Monitoring: This pillar combines two crucial elements. Explainability ensures transparency in how AI models arrive at their decisions, building user trust and facilitating performance improvement. Model monitoring involves continuously tracking the model’s behavior, identifying any biases or issues impacting its accuracy and effectiveness.

- 2. ModelOps: This pillar focuses on establishing a well-defined lifecycle management for AI models. It encompasses the entire journey, from development and deployment to ongoing monitoring, maintenance, and updating. Robust ModelOps practices ensure the continued reliability and effectiveness of AI models over time.

- 3. AI Application Security: This pillar safeguards the integrity and functionality of AI models and their applications. It involves implementing security measures throughout the AI lifecycle to protect against unauthorized access, manipulation, and misuse. This ensures the reliability of the model’s outputs and protects sensitive data.

- 4. Privacy: This pillar emphasizes the responsible handling of data used in AI models. It involves ensuring compliance with relevant data protection regulations and implementing appropriate security controls. By prioritizing data privacy, organizations can build user trust, minimize the risk of data breaches, and ensure responsible AI development and deployment.

Adopting AI TRiSM Methodology for Network Copilot™

Network Copilot transcends the typical tech offering. It’s a conversational AI crafted to meet the complex demands of modern network infrastructures. Its design is LLM-agnostic, ensuring seamless integration without disrupting your current systems, and it doesn’t demand a PhD in data science to get started. Engineered with enterprise-grade compliance at its core, it offers not just power but also reliability and security.

Dive deeper today: with Network Copilot™, you get seamless integration, enterprise-grade reliability, and enhanced security, all with ease.

1. Documentation of AI Model and Monitoring:

To ensure the successful use and management of the Aviz Network Copilot, comprehensive and up-to-date user manuals, technical documentation, and training materials are created. This includes detailed documentation explaining how the AI model makes decisions. Additionally, a clear privacy statement is provided guaranteeing that Network Copilot will not access, transfer, or manipulate sensitive information such as passwords.
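As an illustration of the kind of safeguard such a privacy statement implies, the following is a minimal sketch of redacting secrets from device configuration text before it reaches any language model. It is not Aviz's implementation: the function name `redact_secrets` and the keyword list are assumptions made for this example, and real device configurations vary by vendor.

```python
import re

# Hypothetical keyword list for illustration only; real configs differ by vendor.
SECRET_PATTERN = re.compile(r"(?i)\b(password|secret|community)\b[^\n]*")

def redact_secrets(text: str) -> str:
    """Replace everything after a sensitive keyword, up to end of line, with a placeholder."""
    return SECRET_PATTERN.sub(r"\1 <redacted>", text)

config = "username admin password S3cr3t!\nsnmp-server community public"
print(redact_secrets(config))
```

Redacting the rest of the line, rather than a single token, is a deliberately conservative choice: it may hide more than strictly necessary, but it avoids leaking a secret that follows an extra option word.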

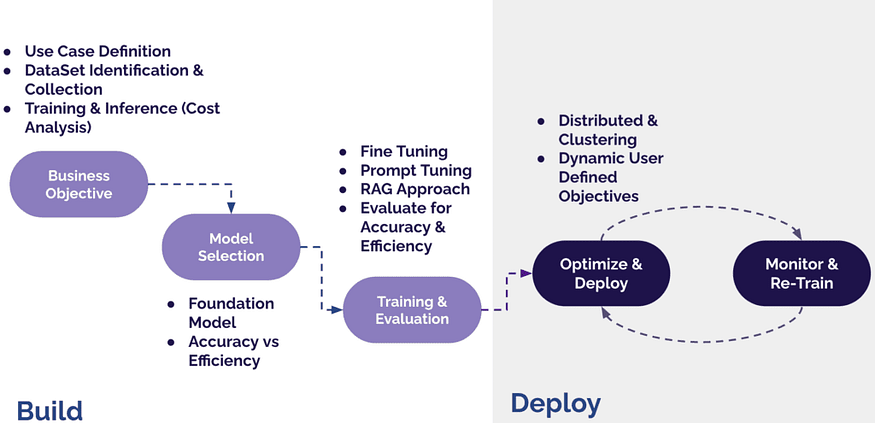

2. Well-defined Life Cycle Management:

A well-defined life cycle management process is established for the Aviz Network Copilot product, encompassing all stages from building to deployment. This process involves defining clear criteria for use case identification, dataset identification, model training, model selection, model deployment, and monitoring and retraining. A communication plan is also in place to effectively inform users about any product changes and updates.
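The stages named above can be sketched as an ordered enumeration. This is only an illustrative model of the sequence, not the product's actual pipeline: the `Stage` and `next_stage` names are hypothetical, and the loop from monitoring back to training is an assumption about how "monitor and re-train" would cycle.

```python
from enum import Enum

class Stage(Enum):
    USE_CASE_IDENTIFICATION = 1
    DATASET_IDENTIFICATION = 2
    MODEL_TRAINING = 3
    MODEL_SELECTION = 4
    MODEL_DEPLOYMENT = 5
    MONITOR_AND_RETRAIN = 6

def next_stage(current: Stage) -> Stage:
    """Advance one stage; assume monitoring loops back to training for a re-train."""
    if current is Stage.MONITOR_AND_RETRAIN:
        return Stage.MODEL_TRAINING
    return Stage(current.value + 1)

print(next_stage(Stage.MODEL_SELECTION).name)
```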

3. System Checks and Bias Balancing:

To mitigate potential biases in the Aviz Network Copilot product, regular system checks, such as guardrails, are conducted. This involves testing the product with diverse datasets and user groups to identify and address any bias that may arise. A bias mitigation strategy is developed and implemented, incorporating techniques such as data normalization, algorithm adjustments, or fairness checks. Furthermore, the performance of the Aviz Network Copilot product is continuously monitored to identify any emerging biases or fairness issues.
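One simple form a fairness check can take is comparing accuracy across groups and flagging a large gap. The sketch below assumes evaluation results are available as (group, was_correct) pairs; the group labels and function names are hypothetical and do not describe how Network Copilot performs its checks.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """Compute accuracy per group from (group, was_correct) pairs."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for group, ok in records:
        totals[group] += 1
        correct[group] += int(ok)
    return {g: correct[g] / totals[g] for g in totals}

def max_accuracy_gap(records):
    """Difference between the best and worst per-group accuracy."""
    scores = accuracy_by_group(records)
    return max(scores.values()) - min(scores.values())

# Hypothetical evaluation results, grouped by network vendor.
results = [
    ("vendor_a", True), ("vendor_a", True),
    ("vendor_b", True), ("vendor_b", False),
]
print(accuracy_by_group(results), max_accuracy_gap(results))
```

A gap above a chosen threshold would be a signal to revisit the training data or evaluation set for the weaker group; the threshold itself is a policy decision and is not specified here.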

4. Responsible Handling of Data:

To ensure responsible data handling practices, robust security measures are implemented for the Aviz Network Copilot product. These measures include encryption, access controls, and regular security audits. Furthermore, clear guidelines are established to define how user data will be collected, stored, used, and shared. Additionally, informed consent will be obtained from users before collecting and using their data. Finally, users will be provided with clear and accessible information about their data privacy rights and how they can exercise those rights.

Conclusion

Aviz Network Copilot prioritizes responsible AI practices through its commitment to the AI TRiSM framework, emphasizing Trust, Risk, and Security Management (TRiSM) throughout its lifecycle. This ensures transparency by providing clear explanations for the AI model’s decisions, fostering user trust. The potential for bias is mitigated through regular system checks and a dedicated bias mitigation strategy. Additionally, robust security measures safeguard the model and user data, further demonstrating Aviz Network Copilot’s commitment to responsible AI development and user confidence.

FAQs

1. What is AI TRiSM and why is it important for responsible AI in networking?

Answer: AI TRiSM (Trust, Risk, and Security Management) is a framework that ensures AI models are developed ethically and responsibly. It builds user trust, manages risks, and ensures security throughout the AI lifecycle, which is especially crucial for AI solutions like Network Copilot in critical infrastructure environments.

2. How does Aviz Network Copilot implement AI TRiSM principles?

Answer: Aviz Network Copilot follows AI TRiSM by:

- Documenting AI model behavior

- Monitoring model performance and bias

- Managing lifecycle from training to deployment

- Protecting user data with strong encryption and consent-based practices

This ensures a secure, transparent, and trustworthy AI solution for network management.

3. What security measures are in place to protect user data in Network Copilot?

Answer: Network Copilot uses:

- End-to-end encryption

- Strict access controls

- Regular security audits

Users’ data is collected only with informed consent, ensuring full compliance with privacy best practices and regulatory standards.

4. How does Network Copilot address AI model bias and fairness?

Answer: Aviz Network Copilot runs:

- Regular system checks

- Guardrails testing with diverse datasets

- Bias mitigation techniques like normalization and fairness algorithms

This keeps the AI model balanced, inclusive, and accurate across different network environments and user groups.

5. Why should enterprises choose an AI-driven network assistant that follows AI TRiSM guidelines?

Answer: Choosing a TRiSM-compliant AI like Network Copilot™ means:

- Greater trust in AI-driven decisions

- Reduced risk of compliance breaches

- Stronger data protection and security resilience

- Future-proofing network operations with ethical, explainable AI innovation
